With companies increasingly using AI for decision making in everything from pricing to recruitment, the technical paper “Addressing the problem of algorithmic bias” explores how these decision-making systems can result in unfairness.

The technical paper also offers practical guidance for companies to ensure that when they use AI systems, their decisions are fair, accurate and comply with human rights.

The paper, the first of its kind in Australia, is the result of a collaboration between the Australian Human Rights Commission, Gradient Institute, Consumer Policy Research Centre, CHOICE and CSIRO’s Data61.

“Human rights should be considered whenever a company uses new technology, like AI, to make important decisions,” said Human Rights Commissioner Edward Santow.

“Artificial intelligence promises better, smarter decision making, but it can also cause real harm. Unless we fully address the risk of algorithmic bias, the great promise of AI will be hollow.”

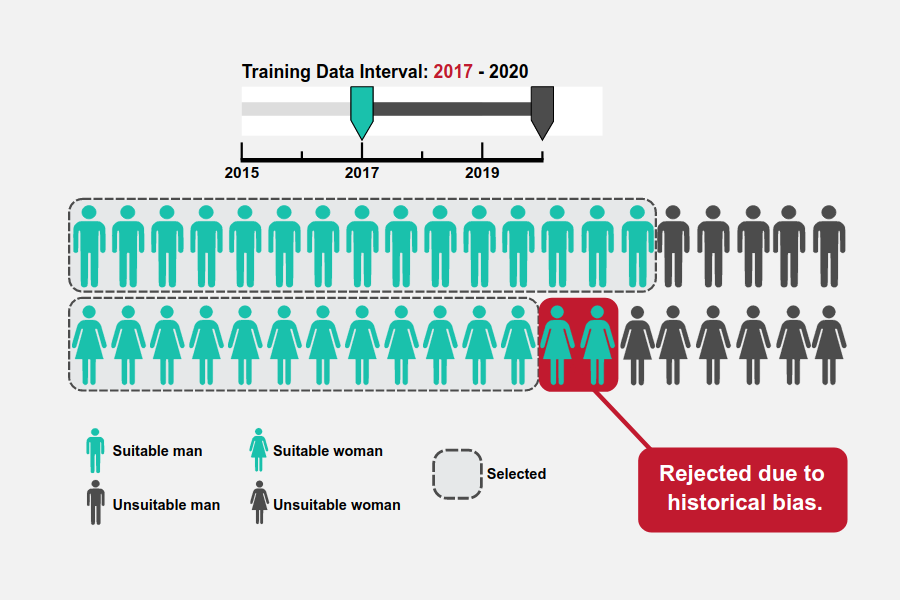

Algorithmic bias can arise in many ways. Sometimes the problem is with the design of the AI-powered decision-making tool itself. Sometimes the problem lies with the data set that was used to train the AI tool. In either case, the result is often that customers and others are treated unfairly.

“The good news is that algorithmic biases in AI systems can be identified and steps taken to address problems,” said Bill Simpson-Young, Chief Executive of Gradient Institute.

“Responsible use of AI must start while a system is under development and certainly before it is used in a live scenario. We hope that this paper will provide the technical insight developers and businesses need to help their algorithms operate more ethically.”

“Strong protections are needed for Australian consumers, who want to know how their data is collected and used by business,” said Lauren Solomon, CEO of the Consumer Policy Research Centre.

“Businesses should ensure the lawful and responsible use of the decision-making tools they use and this new technical paper highlights why that is so important.”

“Businesses should proactively identify human rights and other risks to consumers when they’re using artificial intelligence systems,” said Erin Turner, Director of Campaigns at CHOICE.

“Good businesses go further than the bare minimum legal requirements. They think about consumer outcomes at every step to ensure they act ethically and that they retain the trust of their customers.”

“Organisations need to take a responsible approach to AI,” said Jon Whittle, Director at Data61, the data and digital specialist arm of Australia’s national science agency, CSIRO.

“This includes the rigorous design and testing of the algorithms and datasets, but also of software development processes as well as proper training in ethical issues for technology professionals. This technical paper contributes to this critically important area of work and complements well other initiatives such as Data61’s AI Ethics Framework.”

Addressing Algorithmic Bias in AI – Why It’s Important

- Edward Santow, Human Rights Commissioner, Australian Human Rights Commission

- Erin Turner, Director of Campaigns and Communications, CHOICE

- Bill Simpson-Young, Chief Executive, Gradient Institute

- Lauren Solomon, Chief Executive Officer, Consumer Policy Research Centre

REPORTS, IMAGES AND BACKGROUND AVAILABLE

- “Using artificial intelligence to make decisions: Addressing the problem of algorithmic bias” | Download the report here

- Fast Facts | Media backgrounder

- Photo Gallery | Images for media use

For more information and interviews with the Human Rights Commissioner, contact Liz Stephens | +61 430 366 529 | liz.stephens@humanrights.gov.au

For information on technical aspects of the report, and to interview Gradient Institute researchers, contact Wilson da Silva | +61 407 907 017 | wilson@gradientinstitute.org

ABOUT THE PARTNERS

Australian Human Rights Commission is Australia’s National Human Rights Institution. It is an independent statutory organisation with responsibility for leading the promotion and protection of human rights in Australia.

Gradient Institute is an independent non-profit that researches, designs and develops ethical AI systems, and provides training in how to build accountability and transparency into machine learning systems. It provides technical leadership in evaluating, designing, implementing and measuring AI systems against ethical goals.

Consumer Policy Research Centre (CPRC) is an independent, non-profit, consumer think-tank. We work closely with policymakers, regulators, academia, industry and the community sector to develop, translate and promote evidence-based research to inform practice and policy change. Data and technology issues are a research focus for CPRC, including emerging risks and harms and opportunities to better use data to improve consumer wellbeing and welfare.

CHOICE is the consumer advocate, set up by consumers for consumers, that provides Australians with information and advice, free from commercial bias. CHOICE fights to hold industry and government accountable and achieve real change on the issues that matter most.

CSIRO’s Data61 is the data and digital specialist arm of Australia’s national science agency. We are solving Australia’s greatest data-driven challenges through innovative science and technology. We partner with government, industry and academia to conduct mission-driven research for the economic, societal and environmental benefit of the country.