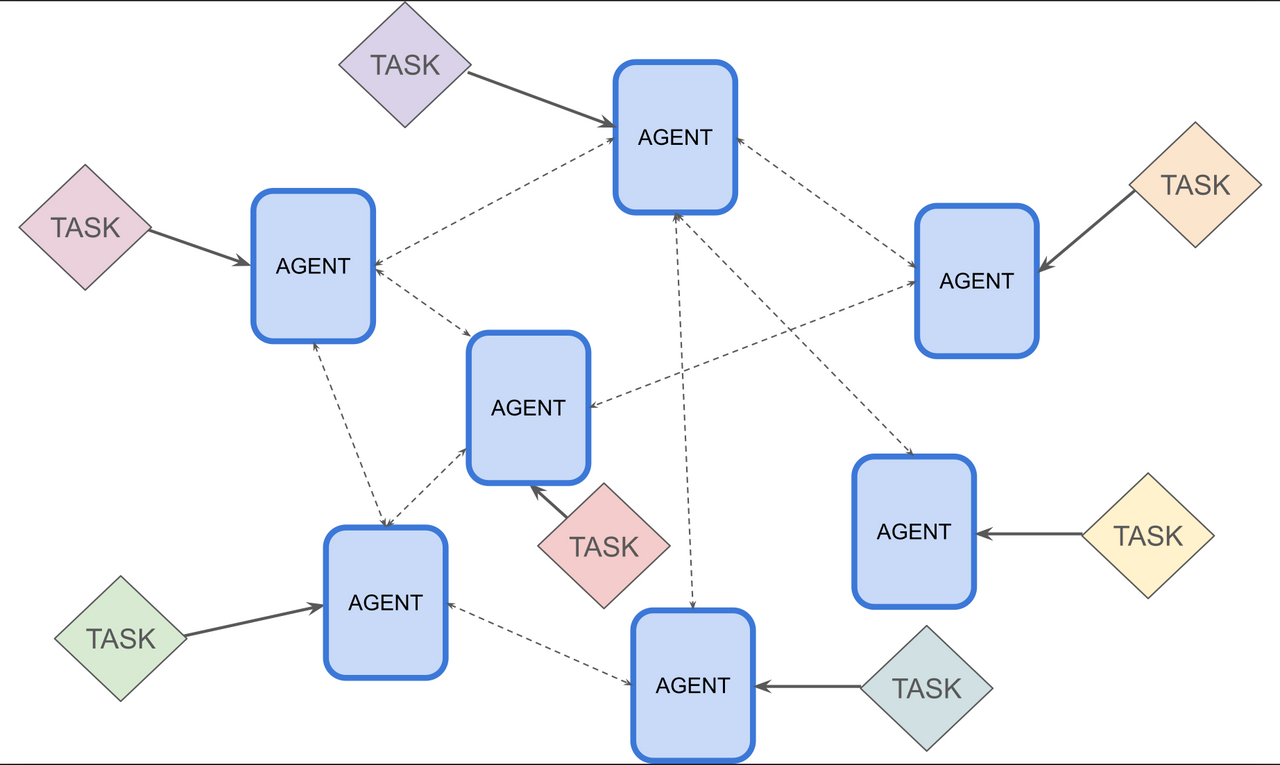

Organisations are starting to adopt AI agents based on large language models (LLMs) to automate complex tasks, with deployments evolving from single agents towards multi-agent systems. While this promises efficiency gains, multi-agent systems fundamentally transform the risk landscape rather than simply adding to it. A collection of safe agents does not guarantee a safe collection of agents – interactions between multiple LLM agents create emergent behaviours and failure modes that extend beyond those of the individual components.

This report provides guidance for organisations assessing the risks of multi-agent AI systems operating in a governed environment, that is, one in which the configuration and deployment of all agents involved are under control. We focus on the critical early stages of risk management – risk identification and analysis – offering tools that practitioners can adapt to their contexts rather than prescriptive frameworks.